regularization machine learning mastery

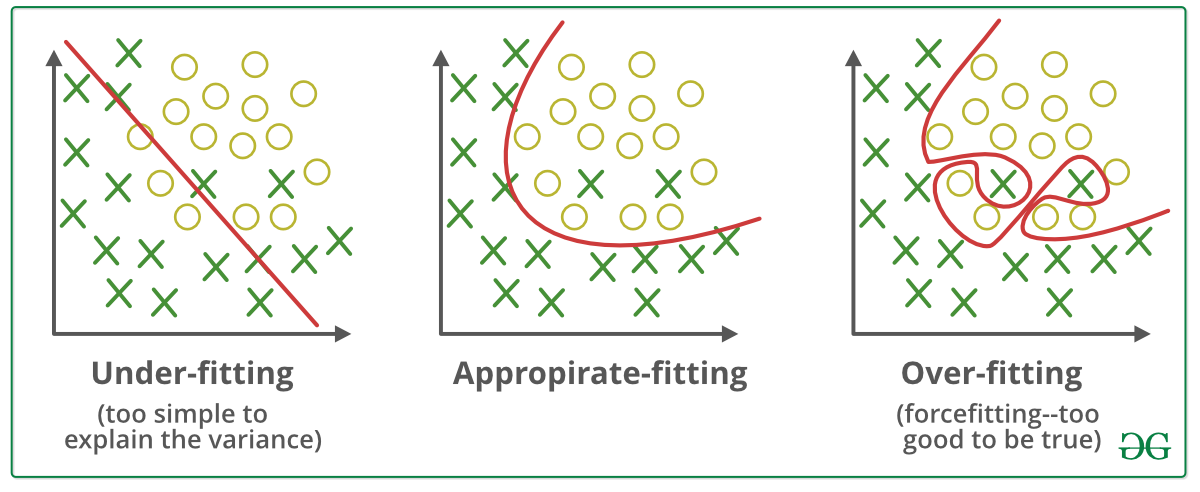

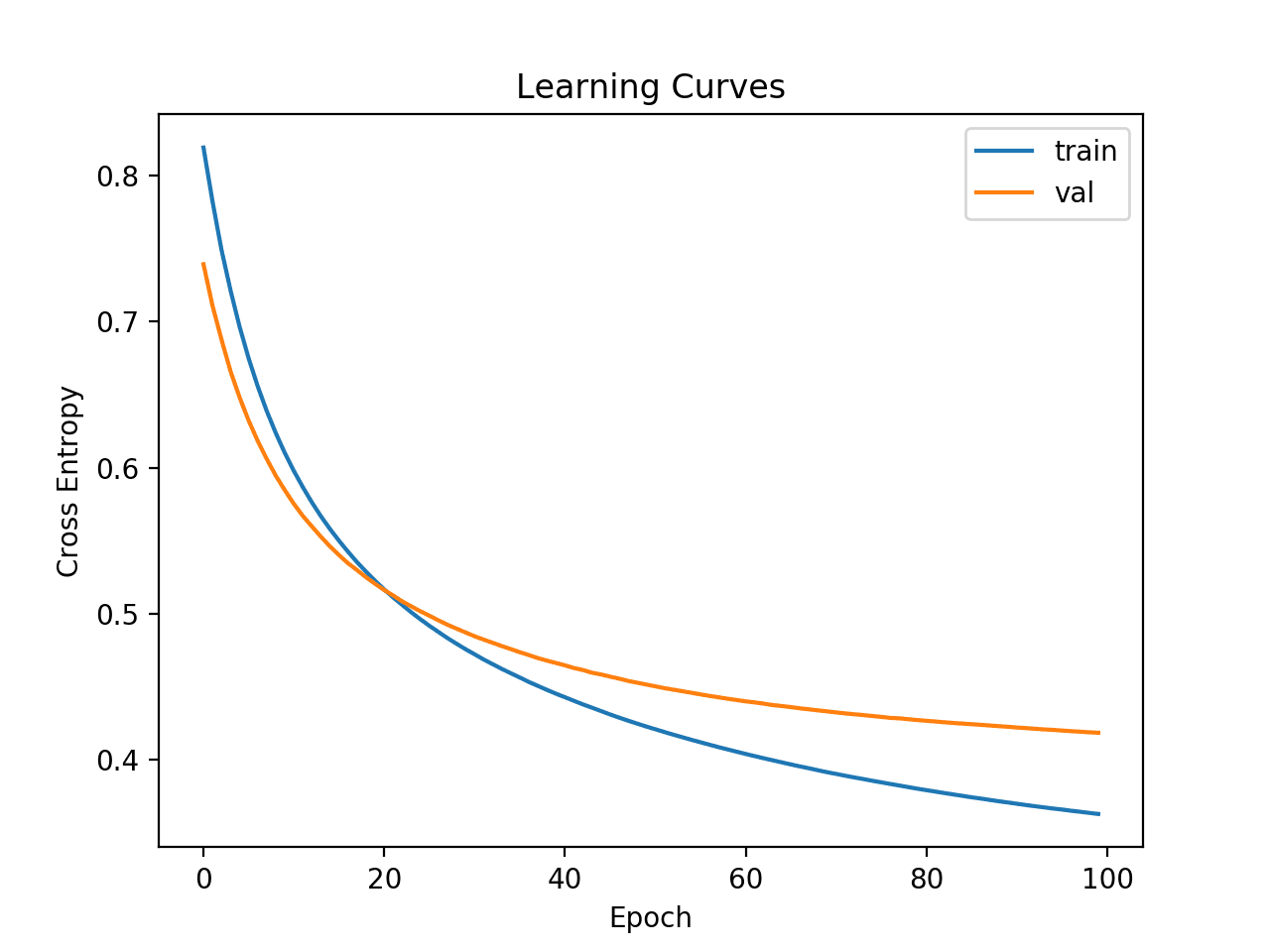

One of the major aspects of training a machine learning model is avoiding overfitting. Part 1 covers the theory: why regularization came into the picture and why we need it.

Regularization in Machine Learning and Deep Learning, by Amod Kolwalkar (Analytics Vidhya, Medium)

Part 2 explains what regularization is and presents some related proofs.

A good value for dropout in a hidden layer is between 0.5 and 0.8. When you are training your model through machine learning with the help of ... L1 regularization is also known as Lasso regression.

The default interpretation of the dropout hyperparameter is the probability of training a given node in a layer, where 1.0 means no dropout and 0.0 means no outputs from the layer. The original paper is titled "Dropout: A Simple Way to Prevent Neural Networks from Overfitting." Data scientists typically use regularization in machine learning to tune their models during the training process.
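The retain-probability convention described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function name `apply_dropout`, the parameter name `keep_prob`, and the rescaling by `1 / keep_prob` (often called inverted dropout) are choices made for this sketch, not something the text prescribes.

```python
import random


def apply_dropout(activations, keep_prob, rng=None):
    """Keep each activation with probability keep_prob, zero it otherwise.

    Hypothetical helper for illustration. keep_prob follows the convention
    in the text: 1.0 means no dropout, 0.0 means no outputs from the layer.
    """
    if not 0.0 <= keep_prob <= 1.0:
        raise ValueError("keep_prob must be in [0, 1]")
    if rng is None:
        rng = random.Random()
    if keep_prob == 0.0:
        # Nothing is retained, so the layer emits only zeros.
        return [0.0 for _ in activations]
    # Assumption of this sketch: scale retained values by 1 / keep_prob so
    # the expected activation stays the same as without dropout.
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


hidden = apply_dropout([0.2, 1.5, -0.7, 0.9], keep_prob=0.5)
```

Some libraries define the hyperparameter the other way around, as the fraction of units to drop, so it is worth checking which convention a given API uses before copying a value such as 0.8.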

I have covered the entire concept in two parts. Moving on with this article on regularization in machine learning: regularization is a form of regression that shrinks the coefficient estimates towards zero.

Regularization is a technique to prevent the model from overfitting by adding extra information to it. For dropout, input layers use a larger value, such as 0.8; because the value is the probability of retaining a node, this means fewer inputs are dropped. Regularization is one of the most basic and important concepts in machine learning.

Overfitting happens because your model is trying too hard to capture the noise in your training dataset. Machine learning involves equipping computers to perform specific tasks without explicit instructions.

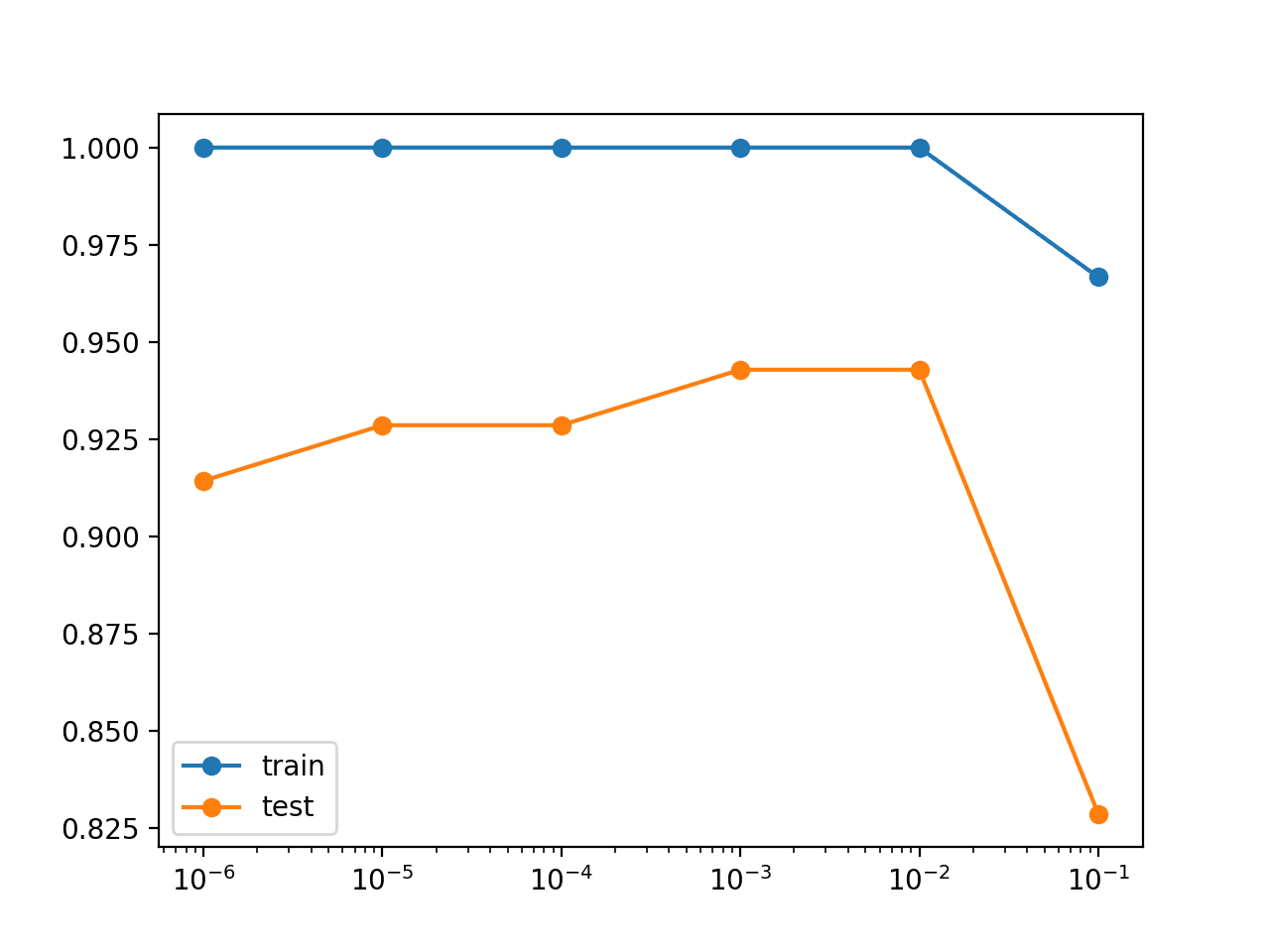

L2 regularization is also known as Ridge regression. In simple words, regularization discourages learning a more complex or flexible model, in order to prevent overfitting. You can refer to this playlist on YouTube for any queries regarding the math behind the concepts in machine learning.

Regularization normalizes and moderates the weights attached to a feature or a neuron so that algorithms do not rely on just a few features or neurons to predict the result. It is the most widely used technique for penalizing complex models in machine learning; it reduces overfitting, and so shrinks the generalization error, by keeping network weights small. In other words, this technique forces us not to learn a more complex or flexible model, to avoid the problem of overfitting.

In their 2014 paper, Dropout ... Let us understand this concept in detail. It means the model is not able to ...

I have learnt regularization from different sources, and I feel learning from different ... Optimization function = Loss + Regularization term. In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero.
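The statement that the optimization function is the loss plus a regularization term can be written compactly as follows. The symbols $J$, $L$, $R$, $w$ and $\lambda$ are notation assumed for this sketch; the text itself does not name them.

```latex
% Regularized objective: data-fit loss plus a weighted penalty on the weights.
J(w) = L(w) + \lambda \, R(w), \qquad \lambda \ge 0

% Two common choices of penalty:
R_{\mathrm{L1}}(w) = \sum_{j} \lvert w_j \rvert
\qquad
R_{\mathrm{L2}}(w) = \sum_{j} w_j^{2}
```

Here $\lambda$ controls how strongly large coefficients are penalized: with $\lambda = 0$ the objective reduces to the plain loss, and larger values shrink the coefficients more aggressively towards zero.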

If the model is logistic regression, then the loss is ... Regularization is essential in machine learning and deep learning. Setting up a machine learning model is not just about feeding it data.

In this post, you will discover activation regularization as a technique to improve the generalization of learned features in neural networks. This article focuses on L1 and L2 regularization. Equation of a general learning model.

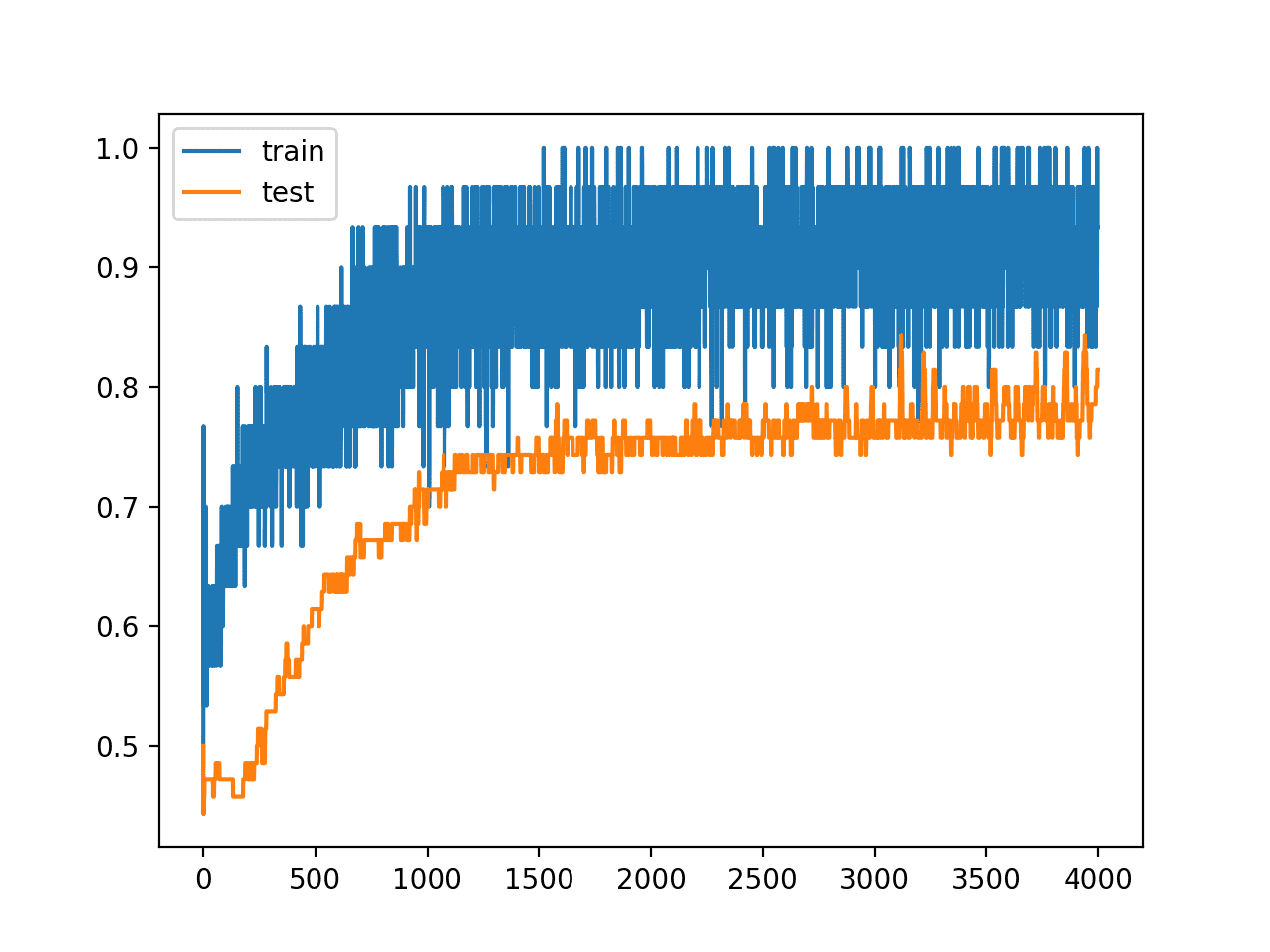

Regularization is used in machine learning as a solution to overfitting: it reduces the variance of the model under consideration. Sometimes a machine learning model performs well on the training data but does not perform well on the test data.

Activity (or representation) regularization provides a technique to encourage the learned representations, that is, the output or activation of the hidden layer or layers of the network, to stay small and sparse. Dropout regularization for neural networks: dropout is a regularization technique for neural network models proposed by Srivastava et al. in their 2014 paper "Dropout: A Simple Way to Prevent Neural Networks from Overfitting."

The cheat sheet below summarizes different regularization methods. Sometimes one resource is not enough to give you a good understanding of a concept. Regularization helps us build a model that is less biased towards the particular training data it was fitted on.

Regularization is an important concept in machine learning, and it addresses the overfitting problem. Concept of regularization: the term refers to a set of techniques that regularize learning from particular features (for traditional algorithms) or from particular neurons (in the case of neural network algorithms).

Regularization is not a complicated technique, and it simplifies the machine learning process. Related topics include using cross-validation to determine the regularization coefficient, and the regularized cost function with gradient descent.
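The two topics named above, a regularized cost function minimized by gradient descent and cross-validation to pick the regularization coefficient, can be sketched together. This is a minimal pure-Python illustration under several assumptions: a linear model without an intercept, a mean-squared-error loss with an L2 penalty, and a simple interleaved k-fold split. The function names (`ridge_cost`, `ridge_gradient_descent`, `choose_lambda`), the learning rate, and the step count are all hypothetical choices for this sketch.

```python
def predict(w, row):
    """Linear prediction without an intercept (a simplification)."""
    return sum(wj * xj for wj, xj in zip(w, row))


def ridge_cost(w, X, y, lam):
    """Mean squared error plus the L2 penalty lam * sum(w_j ** 2)."""
    mse = sum((predict(w, row) - t) ** 2 for row, t in zip(X, y)) / len(X)
    return mse + lam * sum(wj ** 2 for wj in w)


def ridge_gradient_descent(X, y, lam, lr=0.05, steps=2000):
    """Minimize ridge_cost with plain batch gradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        residuals = [predict(w, row) - t for row, t in zip(X, y)]
        # Gradient of the MSE term plus gradient of the penalty (2 * lam * w_j).
        grad = [
            (2.0 / n) * sum(r * row[j] for r, row in zip(residuals, X))
            + 2.0 * lam * w[j]
            for j in range(d)
        ]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


def choose_lambda(X, y, candidates, k=3):
    """Return the candidate with the lowest mean held-out MSE over k folds."""
    best_lam, best_err = None, float("inf")
    for lam in candidates:
        total = 0.0
        for fold in range(k):
            train = [i for i in range(len(X)) if i % k != fold]
            held = [i for i in range(len(X)) if i % k == fold]
            w = ridge_gradient_descent(
                [X[i] for i in train], [y[i] for i in train], lam
            )
            # Score on held-out rows without the penalty (lam = 0).
            total += ridge_cost(
                w, [X[i] for i in held], [y[i] for i in held], 0.0
            )
        if total / k < best_err:
            best_lam, best_err = lam, total / k
    return best_lam
```

On noiseless data the unpenalized fit generalizes perfectly, so `choose_lambda` would be expected to return the smallest candidate; on noisy data with many features, a nonzero coefficient can win. The fixed learning rate assumes small, roughly unit-scale features and may diverge otherwise.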

An overfitting model will have low accuracy on unseen data. Regularization also improves the performance of models on such data.

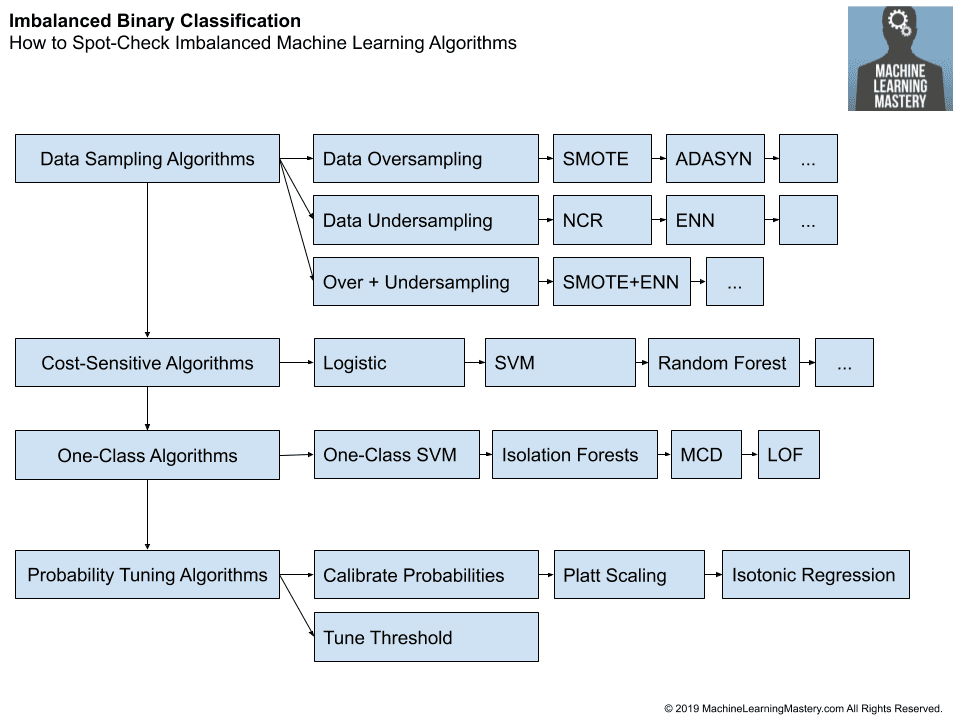

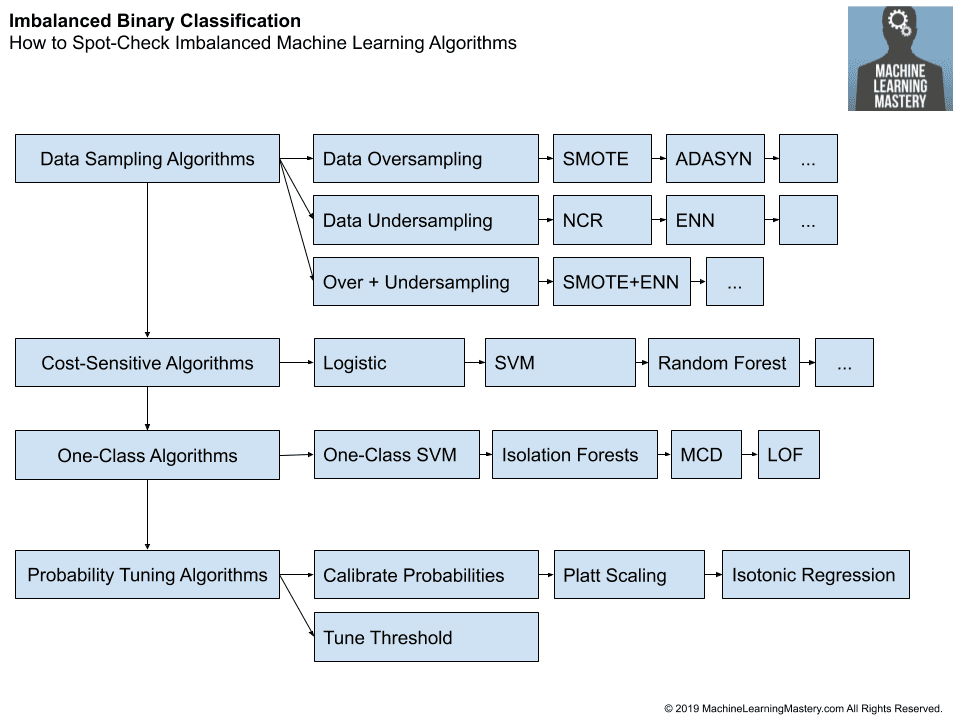

So the systems are programmed to learn and improve from experience automatically. Regularization can be implemented in multiple ways: by modifying the loss function, the sampling method, or the training approach itself. A machine learning model is said to be overfitting when it performs well on the training dataset but comparatively poorly on the test (unseen) dataset.

Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set while avoiding overfitting. Dropout is a technique where randomly selected neurons are ignored during training. There are different ways to go about it, such as measuring a loss function and then iterating over ...

By Data Science Team 2 years ago. A regression model that uses the L1 regularization technique is called Lasso regression, and a model that uses L2 is called Ridge regression. This technique prevents the model from overfitting by adding extra information to it.

Regularization is one of the most important concepts of machine learning. By noise, we mean the data points that don't really represent ...

It is very important to understand regularization in order to train a good model. The key difference between L1 and L2 regularization is the penalty term. A regression model that uses the L1 regularization technique is called LASSO (Least Absolute Shrinkage and Selection Operator) regression.

Ridge regression adds the squared magnitude of the coefficients as a penalty term to the loss function. Lasso regression adds the absolute value of the coefficients instead.
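The difference between the two penalty terms can be made concrete with a short sketch. The function names and the strength parameter `lam` are hypothetical, chosen for illustration; the value returned is only the penalty that would be added to whatever loss the model uses.

```python
def l1_penalty(weights, lam):
    """Lasso-style penalty: lam times the sum of absolute coefficients."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Ridge-style penalty: lam times the sum of squared coefficients."""
    return lam * sum(w * w for w in weights)


weights = [1.0, -2.0, 0.5]
lasso_term = l1_penalty(weights, lam=0.1)  # 0.1 * (1 + 2 + 0.5)
ridge_term = l2_penalty(weights, lam=0.1)  # 0.1 * (1 + 4 + 0.25)
```

Because the L2 term squares each coefficient, it penalizes large weights much more heavily than small ones, whereas the L1 term grows linearly with each weight's size.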


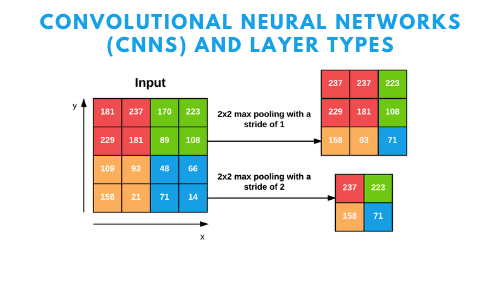
